ML-Agents Quadruped - Teach a quadruped robot to walk using reinforcement learning (C#)

Introduction

What is walking? In four-legged animals, movement comes from lifting the legs one after another.

When one or more limbs are lifted, the quadruped must coordinate the other limbs to keep its support. The sequence of support combinations defines a gait.

The most common gaits include walking, trotting and cantering.

The movement of each limb is made of two phases:

- laid down: limb in contact with the ground.

- raised: end of the limb (foot) in the air.

One laid-down phase followed by one raised phase forms a movement cycle.

Of all the gaits, walking is the slowest. At a normal walking speed, a quadruped is always supported by two or three limbs, which makes the walk a very stable gait. Both lateral supports (two limbs on the same side) and diagonal supports (a front limb with the opposite hind limb) are used.
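To illustrate the two-or-three-limb support described above, the following minimal C# sketch models a lateral-sequence walk (left hind, left fore, right hind, right fore, each lifted a quarter cycle after the previous one). The phase offsets and the duty factor of 0.6 (the fraction of a cycle each foot spends laid down) are illustrative assumptions, not values measured on an animal or on our robot.

```csharp
// Illustrative model of a lateral-sequence walk (assumed values, not measured).
public static class WalkGaitSketch
{
    // Phase offsets, as fractions of one cycle, for LH, LF, RH, RF (assumed).
    public static readonly double[] PhaseOffsets = { 0.0, 0.25, 0.5, 0.75 };

    // True when the foot is laid down (in contact with the ground) at normalized time t.
    public static bool IsInStance(double t, double offset, double dutyFactor)
    {
        // Wrap (t - offset) into [0, 1), including when it is negative.
        double phase = ((t - offset) % 1.0 + 1.0) % 1.0;
        return phase < dutyFactor;
    }

    // Number of feet on the ground at normalized time t.
    public static int FeetOnGround(double t, double dutyFactor)
    {
        int count = 0;
        foreach (double offset in PhaseOffsets)
        {
            if (IsInStance(t, offset, dutyFactor)) count++;
        }
        return count;
    }
}
```

Sampling FeetOnGround(k / 100.0, 0.6) for k from 0 to 99 only ever returns 2 or 3: each foot is raised for 0.4 of the cycle and lift-offs are 0.25 apart, so one or two feet are always in the air.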

Development

What is the Crawler?

Crawler is an example environment included in Unity ML-Agents. It tackles the same walking task as ours, but under different constraints. It is still a useful reference.

Constraints

As mentioned above, our constraints differ from those of the Crawler project.

Building a physical version of the robot brings several constraints. The first is a limit on the rotation axes: the physical robot is built with servomotors, which can only rotate around a single axis, over at most 180 degrees (π ≈ 3.14159 radians).

Servomotor reference we will use to build the robot.

Rotation axis and limit for the above servomotor.
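Since the simulated joints and the physical servos must agree on this range, it can help to keep the conversion in one place. The minimal C# sketch below uses hypothetical helper names (ServoRange, ClampDegrees); it converts between degrees and radians and clamps any command to the servo's 0 to 180 degree travel.

```csharp
using System;

// Hypothetical helper for a single-axis servo with 180 degrees of travel.
public static class ServoRange
{
    public const double MinDegrees = 0.0;
    public const double MaxDegrees = 180.0;

    public static double DegreesToRadians(double degrees) => degrees * Math.PI / 180.0;

    public static double RadiansToDegrees(double radians) => radians * 180.0 / Math.PI;

    // Clamp a requested angle to what the servo can physically reach.
    public static double ClampDegrees(double degrees) =>
        Math.Clamp(degrees, MinDegrees, MaxDegrees);
}
```

For example, ClampDegrees(200) returns 180, and DegreesToRadians(180) returns π.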

3D Model

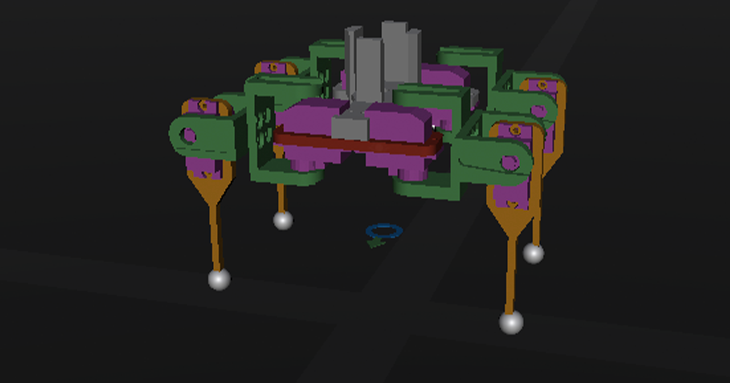

To build both a physical and a digital robot, we need a 3D model that can be imported into Unity.

Starting from this robot 3D model, we used Blender (3D software) to fix the pivot points, rotation and scale of each piece of the model. We also added placeholder models of the servomotors.

After importing all these 3D models into Unity, we get the result below.

For the robot to work with ML-Agents, its structure must stay close to the Crawler's.

We also need to add three elements included in the Crawler project: a Dynamic Platform, a DirectionIndicator and an OrientationCube. The ML-Agents scripts use them to get information about the robot and the target.

Structure of the Scene with all needed components.

Robot Unity physics & Environment

We use Box Colliders to define the collision area of each part of the robot (body, shoulders, legs).

Example of a Box Collider (size and center are calculated automatically).

A Rigidbody was also added to each piece of the robot.

The Rigidbody lets you configure the mass of each piece and enable gravity for it. It is required for any ML-Agents environment that relies on physics.

The mass of each body part must be set to its real weight, in kilograms.

Each leg and shoulder of the robot needs a Configurable Joint to simulate a servomotor. A Configurable Joint lets you choose which axes can rotate and set angular limits on each of them. We only enable one axis, with a range of 180 degrees (π ≈ 3.14159 radians).

In each Configurable Joint, we set the parent Rigidbody as the joint's Connected Body:

- For the shoulders, the parent is the body.

- For each leg, the parent is its shoulder.

We also set the Rotation Drive Mode to Slerp (the target rotation is then driven through the Slerp Drive settings), and set the Projection Mode to "Position and Rotation" with a Projection Distance of 0. Projection moves the body part back into place if the physics simulation pulls it off its joint axis.
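The joint layout described above can also be written down as plain data, which is handy for checking that the simulated robot and the physical one match. The C# sketch below is not Unity code: the joint names are hypothetical, and each joint is modelled with one active axis limited to -90/+90 degrees, an assumed centering of the servo's 180 degrees of travel.

```csharp
using System.Collections.Generic;

// Plain-C# description of one servo joint (hypothetical names and limits).
public sealed record ServoJoint(string Name, string ParentBody, double LowLimitDeg, double HighLimitDeg)
{
    // Total angular travel allowed on the single active axis.
    public double TravelDeg => HighLimitDeg - LowLimitDeg;
}

public static class RobotLayout
{
    static readonly string[] Corners = { "FrontLeft", "FrontRight", "BackLeft", "BackRight" };

    public static List<ServoJoint> BuildJoints()
    {
        var joints = new List<ServoJoint>();
        foreach (string corner in Corners)
        {
            // Each shoulder is connected to the body...
            joints.Add(new ServoJoint($"{corner}Shoulder", "Body", -90, 90));
            // ...and each leg is connected to its own shoulder.
            joints.Add(new ServoJoint($"{corner}Leg", $"{corner}Shoulder", -90, 90));
        }
        return joints;
    }
}
```

BuildJoints() returns eight joints, one per servomotor, each with 180 degrees of travel.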

Unity Scripts (ML-Agents)

As mentioned earlier, we reuse many of the scripts included in the Crawler project.

Behavior Parameters: this script defines the type of behavior the Agent uses.

It defines these parameters:

- Vector Observation space: observation variables corresponding to the position, rotation, velocity and angular velocity of each limb, plus the acceleration and angular acceleration of the body.

- Actions: the actions the agent can choose, corresponding to target rotations for the joints. Our project uses 8 continuous actions, one for each servomotor.

Crawler Agent: this script defines how the agent is set up.

- Type of goal: the agent must move its body toward the goal direction without falling.

- CrawlerDynamicTarget - Goal direction is randomized.

- CrawlerDynamicVariableSpeed - Goal direction and walking speed are randomized.

- CrawlerStaticTarget - Goal direction is always forward.

- CrawlerStaticVariableSpeed - Goal direction is always forward. Walking speed is randomized.

The Crawler agent contains a method called OnActionReceived, which applies the actions chosen by the agent to each body part. In our case, each action must be applied to the single axis that the corresponding servomotor can rotate around.
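In the Crawler example, each continuous action lies in [-1, 1] and is linearly rescaled between the joint's lower and upper angular limits to obtain a target rotation. The sketch below reproduces that idea in plain C# for our single-axis joints, consuming exactly one action per servomotor. It does not use the ML-Agents action buffer types or Unity joints; the class, the method names and the default -90/+90 degree limits are assumptions for illustration.

```csharp
using System;

// Plain-C# sketch: turn 8 continuous actions into single-axis joint targets.
public static class ActionMapping
{
    // Rescale an action from [-1, 1] to [lowDeg, highDeg] (linear interpolation).
    public static float ActionToAngle(float action, float lowDeg, float highDeg)
    {
        float t = (Math.Clamp(action, -1f, 1f) + 1f) * 0.5f; // now in [0, 1]
        return lowDeg + (highDeg - lowDeg) * t;
    }

    // One action per servomotor: action i drives joint i on its only active axis.
    public static float[] ToJointTargets(float[] actions, float lowDeg = -90f, float highDeg = 90f)
    {
        const int JointCount = 8;
        if (actions.Length != JointCount)
            throw new ArgumentException($"Expected {JointCount} actions, got {actions.Length}.");

        var targets = new float[JointCount];
        for (int i = 0; i < JointCount; i++)
        {
            targets[i] = ActionToAngle(actions[i], lowDeg, highDeg);
        }
        return targets;
    }
}
```

Inside a real Agent, OnActionReceived would read these values from its action buffers and send each target to the single active axis of the matching Configurable Joint; that Unity-side wiring is not shown here.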

This script also defines the agent's observations, the sensor data the agent is trained on. When we build the real robot, we will have to keep only the observations that a real sensor can provide.

Among others, the script adds observations for the target orientation, the velocity and whether each body part is touching the ground.
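With the physical robot in mind, one option is to build the observation vector only from quantities a real sensor could measure. In the hypothetical sketch below, the inputs are the eight servo angles (normalized from -90/+90 degrees to [-1, 1]), one ground-contact flag per foot, and the goal direction expressed in the body frame, which is assumed to be available. The choice of sensors and the resulting size of 15 values are assumptions, not the Crawler's actual observation list.

```csharp
using System.Collections.Generic;

// Hypothetical observation builder limited to values measurable on the real robot.
public static class RealWorldObservations
{
    public static List<float> Build(float[] jointAnglesDeg, bool[] footContacts, float[] goalDirBody)
    {
        var obs = new List<float>();

        // Servo angles, mapped from [-90, 90] degrees to [-1, 1].
        foreach (float angle in jointAnglesDeg)
            obs.Add(angle / 90f);

        // One flag per foot: 1 if touching the ground, 0 otherwise.
        foreach (bool contact in footContacts)
            obs.Add(contact ? 1f : 0f);

        // Goal direction in the body frame (x, y, z).
        obs.AddRange(goalDirBody);

        return obs;
    }
}
```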

Finally, the script defines the rewards given to the agent depending on what it does.

If the agent touches the ground with its body rather than a leg, it receives a negative reward.

If the agent touches the target, or moves at a velocity close to the goal velocity, it receives a positive reward.
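The Crawler's per-step reward combines two terms: a speed-matching reward that is 1 when the body's velocity equals the goal velocity and drops to 0 as the error reaches the target speed, multiplied by a look-at reward that is 1 when the body faces the goal. The plain C# sketch below follows that structure with System.Numerics; the exact formulas are our reading of the Crawler example and should be checked against the ML-Agents version you use.

```csharp
using System;
using System.Numerics;

// Sketch of Crawler-style shaped rewards (formulas assumed from the Crawler example).
public static class WalkReward
{
    // 1 when the actual velocity matches the goal, falling to 0 as the error reaches targetSpeed.
    // Assumes targetSpeed > 0.
    public static float MatchSpeedReward(Vector3 goalVelocity, Vector3 actualVelocity, float targetSpeed)
    {
        float error = Math.Clamp(Vector3.Distance(actualVelocity, goalVelocity), 0f, targetSpeed);
        float ratio = error / targetSpeed;
        return MathF.Pow(1f - ratio * ratio, 2f);
    }

    // 1 when the body faces the goal direction, 0 when it faces the opposite way.
    public static float LookAtReward(Vector3 goalForward, Vector3 bodyForward)
    {
        float dot = Vector3.Dot(Vector3.Normalize(goalForward), Vector3.Normalize(bodyForward));
        return (dot + 1f) * 0.5f;
    }

    // Per-step reward: both terms must be high for the product to be high.
    public static float StepReward(Vector3 goalVelocity, Vector3 actualVelocity, float targetSpeed,
                                   Vector3 goalForward, Vector3 bodyForward) =>
        MatchSpeedReward(goalVelocity, actualVelocity, targetSpeed) * LookAtReward(goalForward, bodyForward);
}
```

Multiplying the terms, rather than adding them, means the agent gets little reward for moving at the right speed in the wrong direction.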

Ground Contact: a script that detects when a body part touches the ground and punishes the agent for undesirable contact with a configurable negative reward.
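The decision such a script makes can be expressed without Unity's collision callbacks. In this hypothetical sketch, ground contact by any part other than a leg adds a penalty and can optionally end the episode; the option names echo the settings of the Crawler's GroundContact component, but the class and its signature are our own.

```csharp
// Hypothetical, Unity-free sketch of a ground-contact penalty rule.
public sealed class GroundContactRule
{
    public bool PenalizeGroundContact { get; set; } = true;
    public bool EndEpisodeOnGroundContact { get; set; } = true;
    public float GroundContactPenalty { get; set; } = -1f;

    // Returns the reward change and whether the episode should end after a contact event.
    public (float RewardDelta, bool EndEpisode) OnContact(bool touchedGround, bool isLeg)
    {
        // Legs are supposed to touch the ground; only other parts are punished.
        if (!touchedGround || isLeg)
            return (0f, false);

        float delta = PenalizeGroundContact ? GroundContactPenalty : 0f;
        return (delta, EndEpisodeOnGroundContact);
    }
}
```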

Train a custom model using SAC

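Instead of using PPO to train our agent, as in the Crawler project, we use SAC (Soft Actor-Critic), another algorithm available in ML-Agents. SAC is off-policy: it stores past experiences in a replay buffer and reuses them, so it usually needs fewer environment steps than PPO. This sample efficiency makes it better suited to environments where each step is slow or expensive, such as a real robot.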
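What sets SAC apart is its entropy-regularized ("soft") objective. As an illustration of the technique, not of ML-Agents' internal code, the sketch below computes the soft Bellman target used to train SAC's Q-networks: the reward plus the discounted minimum of two target Q-values, with an entropy bonus weighted by the temperature alpha. The method name and parameters are our own.

```csharp
using System;

// Soft Bellman target used by SAC for its Q-function updates (illustrative only).
public static class SacTarget
{
    public static double SoftQTarget(double reward, bool done, double gamma,
                                     double nextQ1, double nextQ2,
                                     double nextLogProb, double alpha)
    {
        // Clipped double-Q: take the smaller estimate to limit overestimation.
        double minQ = Math.Min(nextQ1, nextQ2);
        // Soft value of the next state: Q minus alpha * log pi, i.e. Q plus an entropy bonus.
        double softValue = minQ - alpha * nextLogProb;
        // No bootstrapping from terminal states.
        return reward + gamma * (done ? 0.0 : 1.0) * softValue;
    }
}
```

For example, with reward 1, gamma 0.99, next-state Q estimates 2 and 3, log-probability -1 and alpha 0.2, the target is 1 + 0.99 × (2 + 0.2) = 3.178. The numbers are arbitrary.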

To train a model we use mlagents-learn. In our setup, it runs the training process against a build of the Unity project.

It also requires a configuration file (.yaml) that defines the trainer type (ppo or sac) and the training hyperparameters.

To start training, run the following command:

mlagents-learn CrawlerDynamic.yaml --run-id=CrawlerBlog --no-graphics --env=RobotBlog.exe --num-envs=8 --force

- CrawlerDynamic.yaml: the name of the configuration file.

- --env: the Unity executable (build) used for training.

- --run-id: name of the folder that will contain the model, logs and results.

- --num-envs: number of training environments run simultaneously.

- --no-graphics: disables rendering of the environments to improve performance.

- --force: overwrites the results of a previous run with the same run-id.

Results

After training, you can check the logs and display these graphs with TensorBoard (tensorboard --logdir results).

Demo