Cycle - Sort your waste with AI (PYTHON / TENSORFLOW)

This blog post describes a school project I made. The project, called Cycle, is a smart trash can that sorts waste using AI.

Introduction

Why is sorting waste a challenge?

In France, the quantity of waste has doubled in 40 years. Each French person produces 573 kg of waste per year.

While it is important to produce less waste, especially to preserve raw materials, it is also important to know where to dispose of it.

Waste sorting depends on each individual citizen. Without the active participation of the consumers who throw waste away, the recycling industry would not be able to recover it.

Helping citizens sort household waste with Cycle can therefore have a significant impact on the recycling process.

How can we make sorting waste easier?

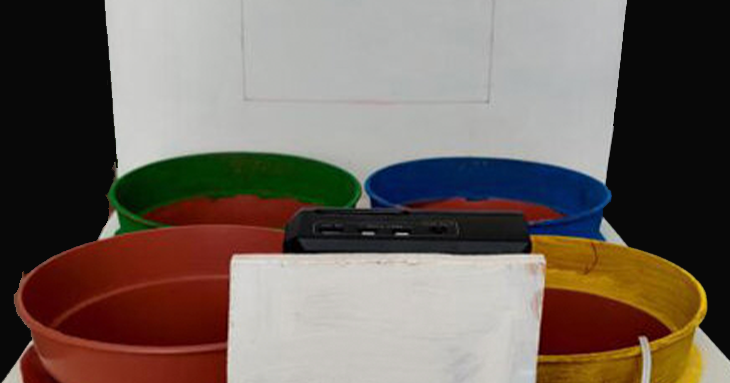

The idea is to create a smart trash can that classifies waste into 4 categories.

Each category is linked to a color:

- Green = Organic

- Yellow = Recyclable (cardboard, aluminum, …)

- Blue = Glass

- Brown = Non-recyclable
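Since the classifier has to choose one of these four categories, its output can be mapped straight to a bin color. The sketch below assumes the model ends in a 4-way softmax; the label order in `CATEGORIES` and the names `pick_bin` and `BIN_COLORS` are hypothetical, not taken from Cycle's code.

```python
from typing import Sequence, Tuple

# Hypothetical output order of the classifier; the real order depends on how
# the training labels were encoded.
CATEGORIES = ("organic", "recyclable", "glass", "non_recyclable")

BIN_COLORS = {
    "organic": "green",
    "recyclable": "yellow",
    "glass": "blue",
    "non_recyclable": "brown",
}


def pick_bin(probabilities: Sequence[float]) -> Tuple[str, str, float]:
    """Return (category, bin color, confidence) for the most probable class."""
    if len(probabilities) != len(CATEGORIES):
        raise ValueError(f"expected {len(CATEGORIES)} scores, got {len(probabilities)}")
    # Index of the highest score (argmax without needing NumPy)
    best = max(range(len(probabilities)), key=lambda i: probabilities[i])
    category = CATEGORIES[best]
    return category, BIN_COLORS[category], probabilities[best]
```

With a Keras or TFLite model, `probabilities` would be the softmax output row for a single image.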

Development: Building a Prototype

Physical Part

The prototype is built around a Raspberry Pi 4, which runs all of the algorithms used by Cycle.

It is also equipped with:

- a speaker

- a microphone

- an LED panel

- a motion sensor

- a camera

Electronics

The two main electronic components of the Cycle prototype are:

- the motion sensor

- the LED panel

They are connected to the Raspberry Pi through a connection board hidden inside the prototype.

Motion Sensor

Cycle uses a motion sensor, located in the center of the prototype, to trigger the waste classification request.

Image of Motion sensor
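A minimal sketch of how the motion sensor could gate the classification request, assuming a PIR-style sensor whose digital output goes HIGH while motion is detected. The GPIO read is injected as a callable so the polling logic can run off the Pi; `wait_for_motion`, `make_gpio_reader`, BCM pin 17 and the 3-reading debounce are illustrative assumptions, not details from the post.

```python
import time
from typing import Callable, Optional


def wait_for_motion(read_pin: Callable[[], int], required_hits: int = 3,
                    poll_interval: float = 0.05,
                    timeout: Optional[float] = None) -> bool:
    """Poll a digital input until it reads HIGH `required_hits` times in a row.

    Returns True when motion is confirmed, or False if `timeout` seconds elapse.
    Requiring consecutive hits is a simple debounce against spurious pulses.
    """
    start = time.monotonic()
    hits = 0
    while True:
        if read_pin():
            hits += 1
            if hits >= required_hits:
                return True
        else:
            hits = 0  # any LOW reading resets the streak
        if timeout is not None and time.monotonic() - start >= timeout:
            return False
        time.sleep(poll_interval)


def make_gpio_reader(pin: int = 17) -> Callable[[], int]:
    """Return a reader for a sensor on a BCM pin (pin 17 is hypothetical)."""
    import RPi.GPIO as GPIO  # only importable on the Raspberry Pi

    GPIO.setmode(GPIO.BCM)
    GPIO.setup(pin, GPIO.IN)
    return lambda: GPIO.input(pin)
```

On the Pi, `wait_for_motion(make_gpio_reader())` blocks until someone approaches, after which the camera can take the picture. RPi.GPIO's `GPIO.wait_for_edge` is an interrupt-style alternative to polling, at the cost of losing the simple consecutive-reading debounce.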

LED Panel

Cycle’s LED panel provides a visual cue for hearing-impaired users: it displays the letter of the color of the bin into which the waste should be thrown.
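A sketch of the LED step, assuming the panel is a MAX7219 dot matrix driven through luma.led_matrix (the post lists `luma.led_matrix.device` but does not name the panel). The letters themselves are also an assumption: English initials would collide, since Blue and Brown both start with "B", so the sketch uses the French initials Vert, Jaune, Bleu and Marron. `BIN_LETTERS`, `show_bin_letter` and `make_max7219_drawer` are hypothetical names.

```python
from typing import Callable

# Assumption: the panel shows the French initial of the bin color
# (Vert, Jaune, Bleu, Marron). English initials would not work here,
# because Blue and Brown both start with "B".
BIN_LETTERS = {"green": "V", "yellow": "J", "blue": "B", "brown": "M"}


def show_bin_letter(color: str, draw_letter: Callable[[str], None]) -> str:
    """Look up the letter for a bin color and hand it to the display callback."""
    letter = BIN_LETTERS[color]
    draw_letter(letter)
    return letter


def make_max7219_drawer(cascaded: int = 1) -> Callable[[str], None]:
    """Build a draw_letter callback for a MAX7219 matrix (hypothetical wiring).

    Imports are local so the rest of this module also runs off the Pi.
    """
    from luma.core.interface.serial import spi, noop
    from luma.core.legacy import text
    from luma.core.legacy.font import proportional, CP437_FONT
    from luma.core.render import canvas
    from luma.led_matrix.device import max7219

    device = max7219(spi(port=0, device=0, gpio=noop()), cascaded=cascaded)

    def draw_letter(letter: str) -> None:
        # canvas() provides a fresh blank frame and pushes it to the device
        # when the with-block exits
        with canvas(device) as draw:
            text(draw, (0, 0), letter, fill="white", font=proportional(CP437_FONT))

    return draw_letter
```

On the Pi, `show_bin_letter("yellow", make_max7219_drawer())` would light up "J". Passing the drawer in as a callback keeps the lookup testable without the hardware.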

Cycle Detection and Triggering

Cycle uses speech-to-text to transcribe what the user says. This technology is used in several of Cycle’s scenarios, including asking Cycle to make a prediction. Like Google Assistant or Alexa, Cycle has its own wake word, “Ok Cycle”, which triggers the detection. The motion sensor provides a second way to trigger Cycle.

To capture speech, Cycle is equipped with a microphone. The SpeechRecognition library starts an audio recording when the sound level exceeds a set threshold. The recording is then sent to Google’s speech-to-text service through the same library, and the Levenshtein distance between the transcript and “Ok Cycle” is computed to check whether the heard text is close enough to the wake word.
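The wake-word check can be sketched as follows. `levenshtein` computes the edit distance with the classic two-row dynamic programming table, and `is_wake_word` turns it into a 0 to 1 similarity against "ok cycle". The 0.8 threshold, the `fr-FR` language code and all function names are assumptions. `listen_once` uses the SpeechRecognition library's `recognize_google` (its wrapper for Google's web speech API); the post does not say which Google endpoint Cycle actually calls. Cycle itself uses FuzzyWuzzy for the distance; the hand-written version here just avoids the extra dependency.

```python
WAKE_WORD = "ok cycle"


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the DP row as short as possible
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                current[j - 1] + 1,            # insertion
                previous[j] + 1,               # deletion
                previous[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        previous = current
    return previous[-1]


def is_wake_word(transcript: str, threshold: float = 0.8) -> bool:
    """True if the transcript is similar enough (assumed threshold) to WAKE_WORD."""
    heard = transcript.lower().strip()
    longest = max(len(heard), len(WAKE_WORD))
    similarity = 1 - levenshtein(heard, WAKE_WORD) / longest
    return similarity >= threshold


def listen_once(language: str = "fr-FR") -> str:
    """Record one phrase and transcribe it with Google's web speech API."""
    import speech_recognition as sr  # needs the SpeechRecognition and PyAudio packages

    recognizer = sr.Recognizer()
    with sr.Microphone() as source:
        recognizer.adjust_for_ambient_noise(source)  # sets energy_threshold from background noise
        audio = recognizer.listen(source)  # starts once energy exceeds the threshold, stops on silence
    try:
        return recognizer.recognize_google(audio, language=language)
    except sr.UnknownValueError:  # nothing intelligible was heard
        return ""
```

In a loop, `is_wake_word(listen_once())` returns True once the user says something close enough to "Ok Cycle". FuzzyWuzzy's `fuzz.ratio` gives a comparable 0 to 100 score, though it is computed slightly differently.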

Datasets

These datasets are freely available and gave us a solid base to understand the problem and start addressing it, so we first trained models on their images. Total size: ~40,000 images.

However, we soon realized that these datasets did not reflect the prototype’s conditions: the prototype is fixed and always shoots from the same angle.

Custom Dataset

We therefore started taking our own photos that better reflected the prototype’s point of view. Total size: ~1,200 images.

Examples of images from the custom dataset.

Examples of images taken by Cycle (the red square is where the object should be placed).

Model

Image of Tensorflow Model structure

Results

We achieved more than 80% accuracy on our validation dataset, as shown in the graph above, which represents one of our model’s training runs.

The gap between the training and validation curves is small, which means the model does not overfit. This is partly due to the use of dropout, which helps prevent overfitting.
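For reference, this is what a dropout layer does. The post does not give the dropout rate used in Cycle's model, so write it as $p$. Keras' `Dropout(rate=p)` layer applies inverted dropout: during training each activation $h_i$ is kept with probability $1 - p$ and rescaled, and at inference the layer does nothing.

$$
\begin{aligned}
m_i &\sim \mathrm{Bernoulli}(1 - p) \\
\tilde{h}_i &= \frac{m_i \, h_i}{1 - p} \quad \text{(training)}, \qquad \tilde{h}_i = h_i \quad \text{(inference)} \\
\mathbb{E}[\tilde{h}_i] &= \frac{(1 - p)\, h_i}{1 - p} = h_i
\end{aligned}
$$

Because the expected activation is unchanged, no rescaling is needed at inference time. Randomly removing units during training prevents the network from relying on any particular co-adapted group of neurons, which is why dropout reduces overfitting.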

We also notice that the validation loss does not diverge much from the training loss and, above all, does not go up, which further indicates that the model does not overfit.
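The two checks described above (a small train/validation gap, and a validation loss that does not go back up) can be computed from the `History` object that Keras' `model.fit` returns. A minimal sketch, assuming the model was compiled with `metrics=["accuracy"]` and trained with validation data; `overfitting_report` is a hypothetical helper, not part of Cycle's code.

```python
from typing import Dict, List


def overfitting_report(history: Dict[str, List[float]]) -> Dict[str, float]:
    """Summarize train/validation divergence from a Keras History.history dict.

    Assumes the keys "accuracy", "val_accuracy", "loss" and "val_loss" exist.
    """
    val_loss = history["val_loss"]
    best_epoch = min(range(len(val_loss)), key=lambda i: val_loss[i])
    return {
        # positive gap = the model does better on data it has already seen
        "accuracy_gap": history["accuracy"][-1] - history["val_accuracy"][-1],
        "loss_gap": val_loss[-1] - history["loss"][-1],
        # epochs since validation loss last improved; a growing value
        # means it is going back up, the classic sign of overfitting
        "epochs_since_best_val_loss": len(val_loss) - 1 - best_epoch,
    }
```

For example, call `overfitting_report(history.history)` after `history = model.fit(train_ds, validation_data=val_ds, epochs=20)`, where `train_ds` and `val_ds` are hypothetical datasets. A large positive `accuracy_gap` or a growing `epochs_since_best_val_loss` are the warning signs; `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True)` automates the second check during training.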

Libraries / References

Image and Processing:

- PIL: for saving and processing images.

- PiCamera: to take pictures with the on-board camera.

Calculation / Machine Learning:

- TensorFlow 2.x: a popular machine learning framework for building, training and deploying models end to end.

- TFLite: to lighten TensorFlow models and run them on embedded systems.

- FuzzyWuzzy: to calculate the Levenshtein distance.

Electronics:

- luma.led_matrix.device: to drive the LED panel.

- RPi.GPIO: to communicate with the Raspberry Pi’s GPIO pins to send and receive signals.

Demo

Download

You can get the code here.